Introduction and course organization

Connect Internet of Things (IoT) enabled devices using scalable cloud services in a project setup.

Devices that communicate with cloud services

You should come up with your own project idea, but here is some inspiration:

You don't have to solve complex problems; you can also create something funny.

Keep in mind: the project idea itself does not matter much. The goal is to learn workflows and technologies.

You should have heard of

HTTP, TCP/IP, UDP, SSH, WebSockets, JSON, Git, Docker, API, Continuous Everything, Pull/Merge Request

| Description | Link |
|---|---|
| GitLab repositories: team projects, source code, issues, ... | https://gitlab.mi.hdm-stuttgart.de/csiot/ss24 |
| Supplementary code repositories: code examples, emulator, ... | https://gitlab.mi.hdm-stuttgart.de/csiot/supplementary |

The course takes place in presence.

If you want to participate remotely (e.g. due to a COVID infection, quarantine or other legitimate reasons), send us an email early enough. We'll make sure to bring the Meeting Owl and start the BBB stream. Note that this is not a high-quality hybrid setup, just sound and a shared screen.

| Date | Session (14:15 - 17:30) | Description |
|---|---|---|
| 19.03.2024 | Kickoff | Course overview, questions and answers |
| 26.03.2024 | Lecture + Idea Pitch | Pitch your project idea (if you have one). Do you already have a team? |
| 02.04.2024 | Lecture + Team Setup | Maximum of 6 teams (4-6 members each) and 30 students in total. Higher semesters take precedence over lower semesters. |
| 09.04.2024 | Lecture | |
| 16.04.2024 | Lecture | |
| 30.04.2024 | Lecture | |
| 07.05.2024 | Working Session + Q&A | Questions regarding presentations at the beginning of the session |
| 14.05.2024 | Midterm presentations | |
| 28.05.2024 | Working Session | |
| 04.06.2024 | Working Session | |
| 11.06.2024 | Working Session | |
| 18.06.2024 | Working Session | |
| 25.06.2024 | Working Session + Q&A | Questions regarding presentations at the beginning of the session |
| 02.07.2024 | Final presentations | |
| 07.07.2024 (Sunday) | Project submission | Commits after the submission date will not be taken into account. Make sure to check the submission guidelines. |

| Group | Time |
|---|---|
| XXX | 14:15 - 14:30 |
| XXX | 14:30 - 14:45 |
| XXX | 14:45 - 15:00 |
| XXX | 15:00 - 15:15 |
| XXX | 15:15 - 15:30 |
| XXX | 15:30 - 15:45 |

Grading is based on the Gitlab repo in the csiot group https://gitlab.mi.hdm-stuttgart.de/groups/csiot

Add a README.md in the repo that contains:

A total of 50 points is split into four categories.

Following general best practices is required in each category.

Although grades are derived from the team's work, each individual receives their own grade, which can differ from those of the other team members.

Code & Architecture (20 Points)

Tooling (15 Points)

Presentation (10 Points)

Technical documentation (5 Points)

The Center for Learning and Development, the Central Study Guidance and the VS (student government) can support you:

Introduction

What do you think?

Cloud computing is the on-demand availability of computer system resources, without direct active management by the user.

| Service | Description | Examples |
|---|---|---|
| Infrastructure as a service (IaaS) | High-level API for physical computing resources | Virtual machines, block storage, object storage, load balancers, networks, ... |
| Platform as a service (PaaS) | High-level application hosting with configuration | Databases, web servers, execution runtimes, development tools, ... |
| Software as a service (SaaS) | Hosted applications without configuration options | Email, file storage, games, project management, ... |
| Function as a service (FaaS) | High-level function hosting and execution | Image resizing, event-based programming, ... |
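To make the FaaS row more concrete, here is a minimal sketch of a function as it might be deployed for the image-resizing example. The `handler(event, context)` signature mirrors the style of AWS Lambda's Python runtime, but the event fields (`bucket`, `key`, `width`, `height`) and `MAX_EDGE` are hypothetical; real platforms define their own event formats. The function only computes thumbnail dimensions, so it runs without any cloud SDK.

```python
MAX_EDGE = 256  # hypothetical target length of the longer thumbnail edge


def handler(event, context=None):
    """FaaS-style entry point: called once per event, keeps no state between calls.

    The event shape is a made-up "object uploaded" notification.
    """
    width, height = event["width"], event["height"]
    scale = min(1.0, MAX_EDGE / max(width, height))  # never upscale small images
    return {
        "source": f"{event['bucket']}/{event['key']}",
        "thumbnail_size": (round(width * scale), round(height * scale)),
    }


if __name__ == "__main__":
    # Local invocation with a fake event; on a FaaS platform, the platform calls handler()
    print(handler({"bucket": "uploads", "key": "beach.jpg", "width": 4000, "height": 3000}))
```

The platform takes care of scaling: each upload event may run in its own short-lived instance, which is why the function does not rely on global mutable state.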

What is Google Photos? iCloud? GitHub? Dropbox? Gmail? EC2? DynamoDB? Google Firebase? Lambda? Google App Engine? Hosted Kubernetes?

Introduction

What do you think?

Software with an architecture that leverages a multitude of cloud services.

An example app and web platform that allows friends from all over the world to collaboratively create a movie from their holidays

| Feature | Technical elements |
|---|---|
| Users can sign up to the platform using an email address or a third-party provider | Email, OAuth2 provider, relational data storage, ... |
| Users can create holiday groups and invite friends | Relational data storage, caching, notification, ... |
| Friends can upload raw footage into holiday groups and tag it | Relational data storage, object storage, transcoding, queueing, search index, caching, notification, ... |
| Friends can edit the footage into a movie using an online editor | Object storage, transcoding, queueing, caching, notification, ... |
| For development and operations | System monitoring and alerting, distributed logging, automated integration and deployment, global content distribution network, virtual network, system environments (development, staging, production, ...) |

Feature: Friends can upload raw footage into holiday groups and tag it
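The upload feature combines several of the technical elements listed in the table above. A minimal, in-memory sketch of the flow, assuming simple stand-ins for the cloud services: a dict plays the object storage, another dict the relational store and search index, `queue.Queue` the message queue, and a list the notification service. All names are hypothetical. The point is the decoupling: the upload request only stores the file and enqueues a job, while a separate worker transcodes it later.

```python
import queue
import uuid

object_storage = {}             # stand-in for object storage (key -> bytes)
footage_index = {}              # stand-in for the relational store / search index
transcode_jobs = queue.Queue()  # stand-in for a message queue
notifications = []              # stand-in for a notification service


def upload_footage(group_id, data, tags):
    """Handle an upload request: store raw footage, record metadata, enqueue transcoding."""
    key = f"raw/{group_id}/{uuid.uuid4()}"
    object_storage[key] = data
    footage_index[key] = {"group": group_id, "tags": set(tags), "status": "uploaded"}
    transcode_jobs.put(key)  # the request returns immediately; the heavy work happens later
    return key


def transcode_worker():
    """Drain the queue once; a real worker would run continuously and call a transcoder."""
    while not transcode_jobs.empty():
        key = transcode_jobs.get()
        # Placeholder "transcoding": copy the bytes to a new key
        object_storage[key.replace("raw/", "transcoded/", 1)] = object_storage[key]
        footage_index[key]["status"] = "transcoded"
        notifications.append(f"group {footage_index[key]['group']}: new footage ready")
        transcode_jobs.task_done()


def find_by_tag(tag):
    """Return the keys of all footage carrying `tag` (what a search index would answer)."""
    return [k for k, meta in footage_index.items() if tag in meta["tags"]]


if __name__ == "__main__":
    k = upload_footage("iceland-2024", b"\x00\x01", ["glacier", "sunset"])
    transcode_worker()
    print(footage_index[k]["status"], find_by_tag("sunset") == [k], notifications)
```

With real cloud services, the worker would typically run as its own scalable process (or function), so a burst of uploads only lengthens the queue instead of slowing down the upload requests.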

Introduction

| Conventional Infrastructure | Cloud Infrastructure |
|---|---|
| (Bare-metal) servers, type 1/2 hypervisors, containers | Cloud resources |
| Long-living assets | Short resource lifespan |
| Own data center, colocation, rented dedicated servers | No own hardware |
| Direct physical access | No access to hardware |

Deployment, configuration, maintenance and teardown have to be automated

Developers need to understand the runtime environment

Operators need to understand some application layers

Service discovery, service configuration, authentication/authorization and monitoring

Integration, deployment, delivery

Scalability, reliability, geo replication, disaster recovery

Introduction

A coded representation for infrastructure preparation, allocation and configuration
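Declarative IaC tools such as Terraform or Pulumi compare the desired state written in code with the actual state of the infrastructure and then apply only the difference. A heavily simplified sketch of that planning step, assuming resources are plain dicts keyed by a resource name (real tools also track dependencies, provider-specific attributes and a persisted state file):

```python
def plan(desired, actual):
    """Compute the actions needed to turn `actual` into `desired`.

    Both arguments map resource names to their configuration (plain dicts here).
    """
    actions = []
    for name, config in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != config:
            actions.append(("update", name))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    desired = {
        "bucket": {"type": "s3_bucket", "acl": "private"},
        "vm": {"type": "server", "size": "cx11"},
    }
    actual = {
        "bucket": {"type": "s3_bucket", "acl": "public-read"},
        "old-vm": {"type": "server", "size": "cx11"},
    }
    for action, name in plan(desired, actual):
        print(action, name)
    # Once the changes are applied, actual equals desired and a new plan is empty
    print(plan(desired, desired))
```

This is why running the same IaC code twice is safe: the second run finds nothing to change.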

Example: Custom OS image with pre-installed Docker on Hetzner Cloud using Packer

```json
{
  "builders": [
    {
      "type": "hcloud",
      "token": "xxx",
      "image": "debian-10",
      "location": "nbg1",
      "server_type": "cx11",
      "ssh_username": "root"
    }
  ],
  "provisioners": [
    {
      "type": "shell",
      "inline": [
        "apt-get update",
        "apt-get upgrade -y",
        "curl -fsSL https://get.docker.com | sh"
      ]
    }
  ]
}
```

Example: Creating an S3 bucket on AWS using Terraform

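```hcl
provider "aws" {
  # Placeholder credentials; in practice, supply them via environment variables
  # or a shared credentials file instead of committing them
  access_key = "xxx"
  secret_key = "xxx"
  region     = "eu-central-1"
}

resource "aws_s3_bucket" "terraform-example" {
  bucket = "aws-s3-terraform-example"
  # Deprecated in AWS provider v4+ in favor of the aws_s3_bucket_acl resource
  acl = "private"
}
```

Example: Installing Docker and initializing a Swarm using an Ansible playbook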

```yaml
- hosts: swarm-master
  roles:
    - geerlingguy.docker
  tasks:
    # Install the Docker SDK for Python, which the docker_swarm module requires
    - pip:
        name: docker
    # Initialize a swarm with this host as manager
    - docker_swarm:
        state: present
```

Example: Adding authorized SSH keys on first boot using cloud-init

```yaml
#cloud-config
ssh_authorized_keys:
  - ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAGEA3FSyQwBI6Z+nCSjUUk8EEAnnkhXlukKoUPND/RRClWz2s5TCzIkd3Ou5+Cyz71X0XmazM3l5WgeErvtIwQMyT1KjNoMhoJMrJnWqQPOt5Q8zWd9qG7PBl9+eiH5qV7NZ mykey@host
  - ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA3I7VUf2l5gSn5uavROsc5HRDpZdQueUq5ozemNSj8T7enqKHOEaFoU2VoPgGEWC9RyzSQVeyD6s7APMcE82EtmW4skVEgEGSbDc1pvxzxtchBj78hJP6Cf5TCMFSXw+Fz5rF1dR23QDbN1mkHs7adr8GW4kSWqU7Q7NDwfIrJJtO7Hi42GyXtvEONHbiRPOe8stqUly7MvUoN+5kfjBM8Qqpfl2+FNhTYWpMfYdPUnE7u536WqzFmsaqJctz3gBxH9Ex7dFtrxR4qiqEr9Qtlu3xGn7Bw07/+i1D+ey3ONkZLN+LQ714cgj8fRS4Hj29SCmXp5Kt5/82cD/VN3NtHw== smoser@brickies
```

Example: Creating a Google Cloud Storage bucket using Pulumi

```python
import pulumi
from pulumi_google_native.storage import v1 as storage

config = pulumi.Config()
project = config.require('project')

# Create a Google Cloud resource (Storage Bucket)
bucket_name = "pulumi-goog-native-bucket-py-01"
bucket = storage.Bucket('my-bucket', name=bucket_name, bucket=bucket_name, project=project)

# Export the bucket self-link
pulumi.export('bucket', bucket.self_link)
```

| Continuous | Requires | Offers | Implementation |
|---|---|---|---|
| Integration | Devs need the right mindset; established workflows | Avoids divergence; ensures integrity/runnability | Shared codebase; integration testing |
| Deployment | Automated deployment; access control / permission management | Ensures deployability | Infrastructure as Code; Docker Swarm, Kubernetes, Nomad, ... |
| Delivery | Deployability; approval from Marketing, Sales, Customer care | Rapid feature release cycles; small to no difference between environments | Same as for continuous deployment; release/feature management |

Proper monitoring and alerting are essential when CD is applied.

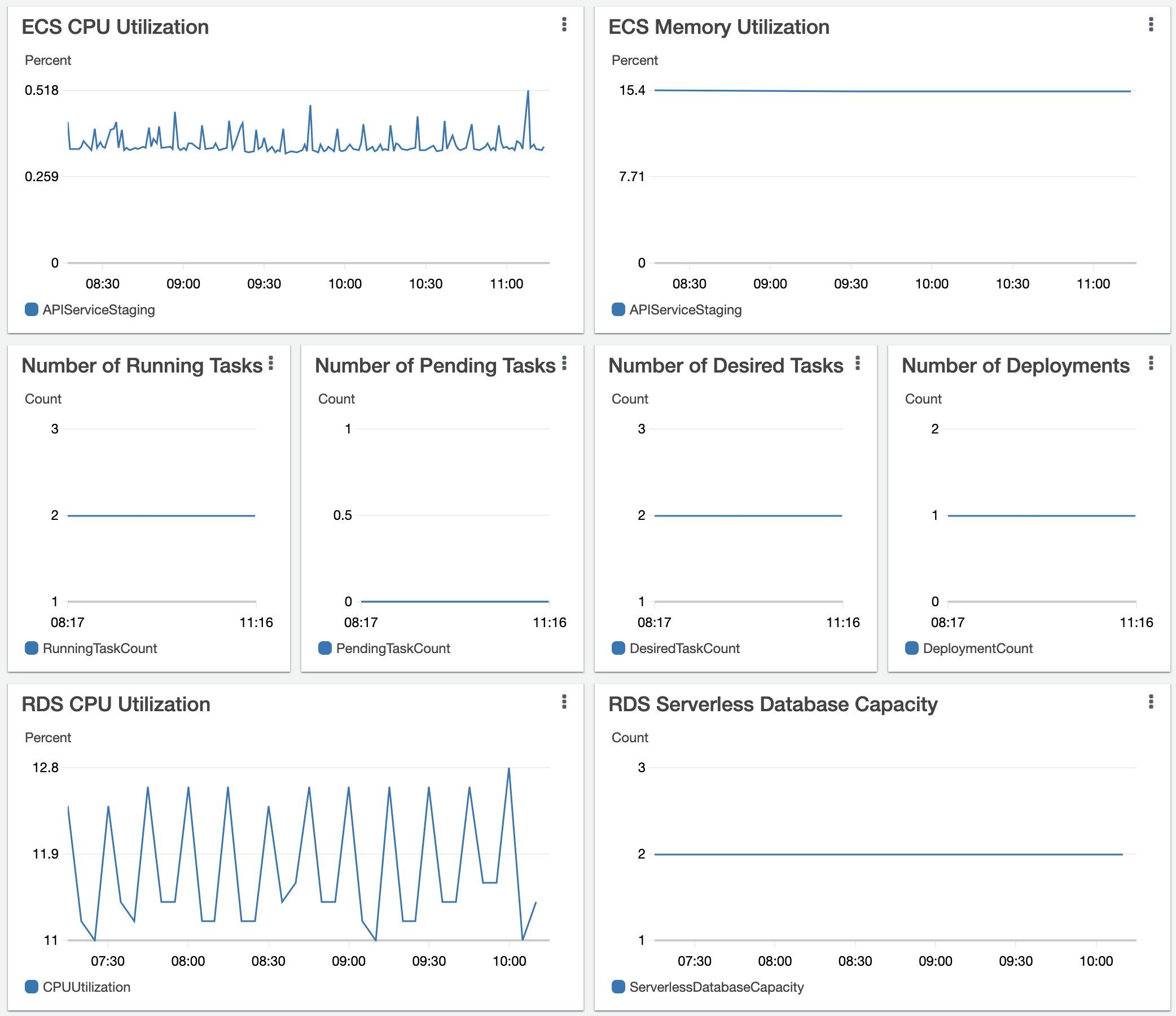

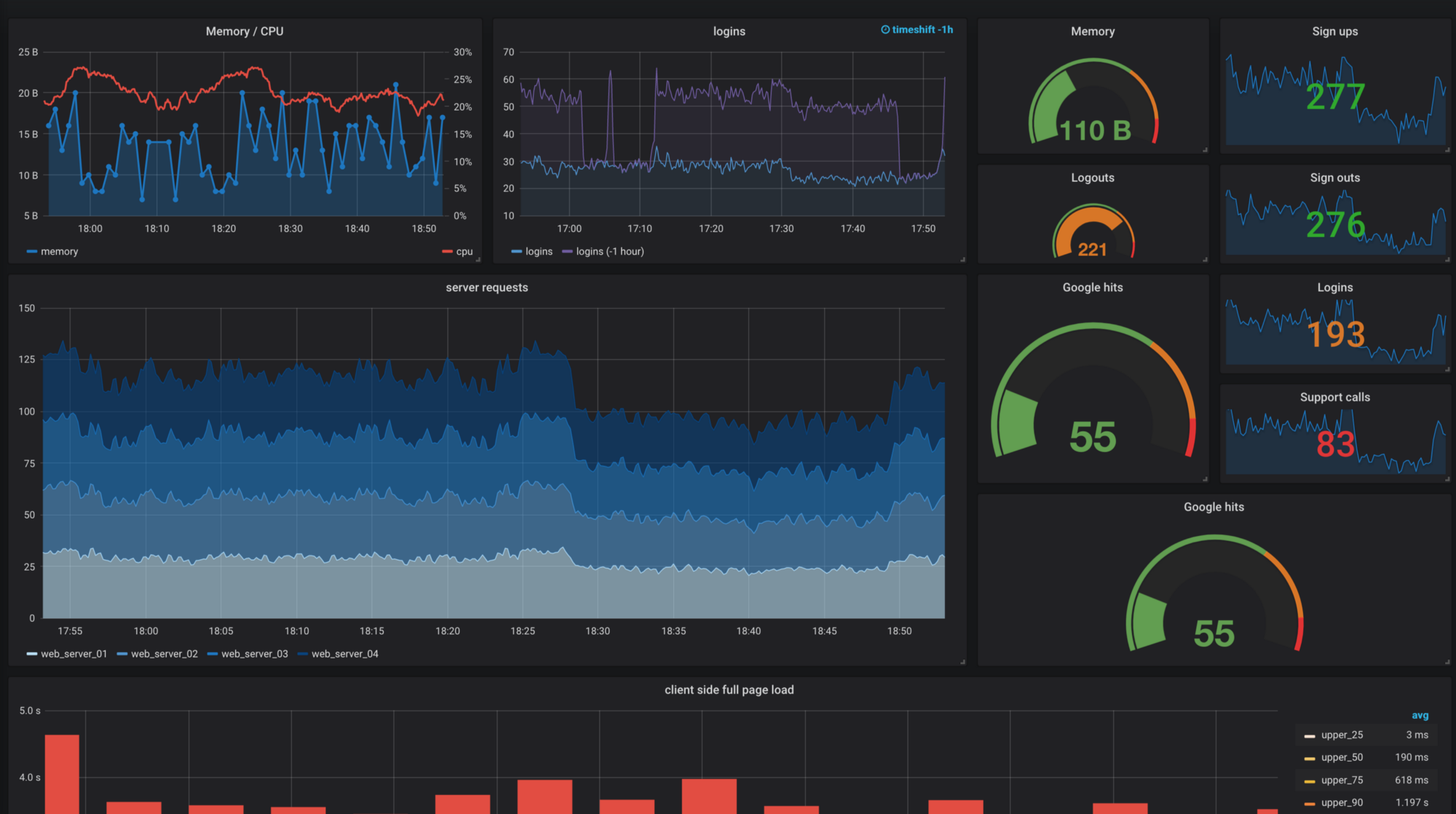

Service for time series data, logs and dashboards

Prometheus: Time series database, metric exporters, Alertmanager

Grafana: (Real-time) Dashboards for monitoring data, Alerting Engine
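Prometheus typically scrapes metrics over HTTP from a `/metrics` endpoint that returns a plain-text exposition format. A minimal stdlib-only sketch that renders station supply levels in that format; the metric name `station_supply_level` and its labels are hypothetical, and in practice the official `prometheus_client` library would generate this output for you.

```python
def escape_label_value(value):
    # The text format requires backslash, double quote and newline to be escaped
    return str(value).replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name, help_text, samples):
    """Render one gauge metric in the Prometheus text exposition format.

    samples: list of (labels, value) pairs, where labels is a dict.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        label_str = ",".join(
            f'{key}="{escape_label_value(val)}"' for key, val in sorted(labels.items())
        )
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_gauge(
        "station_supply_level",
        "Current supply level per station and item.",
        [({"station": "mos-eisley", "item": "oxygen"}, 42),
         ({"station": "mos-eisley", "item": "duct_tape"}, 7)],
    ), end="")
```

Prometheus stores each scraped sample as a time series identified by metric name and labels; Grafana then queries Prometheus to draw dashboards, and alerting rules can fire, for example, when a supply level drops below a threshold.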

Introduction

What do you think?

A system of interrelated computing devices that can transfer data over a network without human interaction

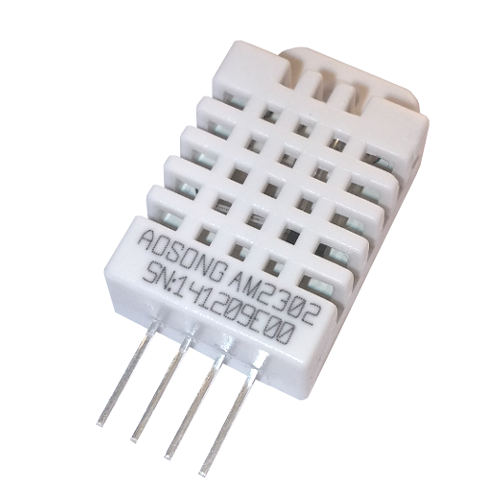

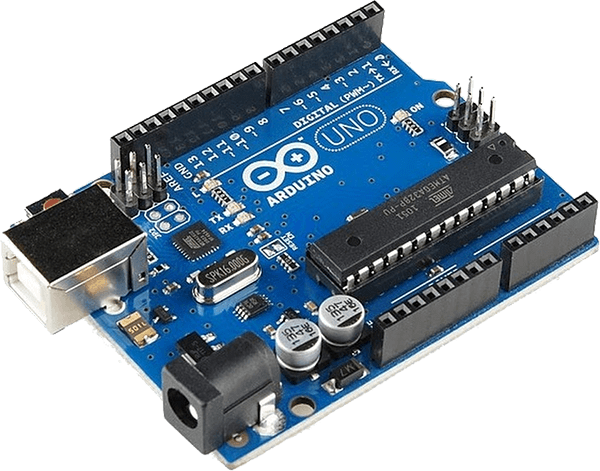

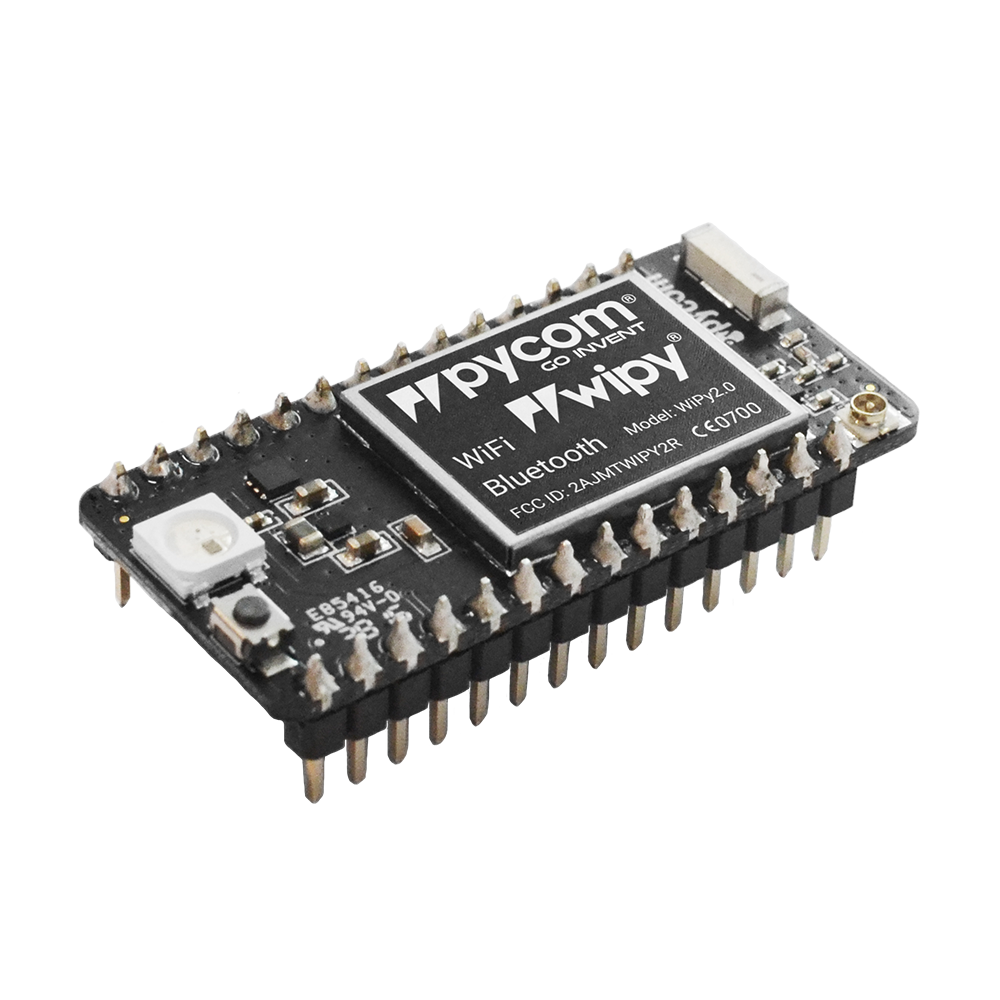

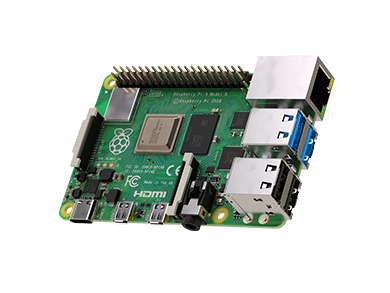

| Device | Type | CPU (max) | RAM | OS | TCP/IP | GPIO |
|---|---|---|---|---|---|---|
| DHT22 | Sensor | - | - | - | ❌ | ❌ |
| Arduino (ATmega328P) | MCU | 20 MHz 8-bit RISC | 2 KiB SRAM | - | ❌ | ✅ |
| ESP32 (Xtensa LX6) | SoC | 2 × 240 MHz 32-bit RISC | 520 KiB SRAM | e.g. FreeRTOS | ✅ | ✅ |
| Raspberry Pi 4 (ARM Cortex-A72) | SoC | 4 × 1.5 GHz 64-bit ARM | 4 GiB LPDDR4 | GNU/Linux | ✅ | ✅ |
| Random Gaming-PC | PC | 8 (HT) × 5.0 GHz 64-bit x86 | 32 GiB DDR4 | e.g. GNU/Linux | ✅ | ❌ |

The following protocols are often used in an Internet of Things stack:

| Name | Network Layer | Description |
|---|---|---|
| LoRa(WAN) | Layer 1/2 | Low power, long range, uses license-free radio frequencies |
| ZigBee | Layer 1/2 | Low power, 2.4 GHz, 64-bit device identifier |
| 6LoWPAN | Layer 1/2 | Low power, 2.4 GHz / license-free radio frequencies, IPv6 addressing |
| Ethernet | Layer 1/2 | Frame-based protocol, also used for the regular internet |
| 802.11 Wi-Fi | Layer 1/2 | Wireless local area network protocol, also used for the regular internet |
| Bluetooth LE | Layer 1/2 | Low energy, wireless personal area network protocol, different from Bluetooth Classic |
| IPv4 and IPv6 | Layer 3 | Packet-based protocol, also used for the regular internet |
| MQTT | Layer 7 | Lightweight Message Queuing Telemetry Transport protocol, publish-subscribe model |

Live demos and practical exercises to learn some new technologies

In the year 3000, space travel had become commonplace, and as humanity spread out across the galaxy, the demand for necessary goods on distant planets skyrocketed. The Evil Mining Corporation saw an opportunity and quickly established itself as the primary supplier of four crucial items: hydrogen, oxygen, WD40, and duct tape.

At first, the corporation's methods were praised for their efficiency. They implemented a sophisticated Internet of Things architecture that relied on MQTT to coordinate information across their network of mining sites and supply ships using the interplanetary GalaxyNet. The system allowed them to accurately track their inventory and ensure that the four essential items were always in stock and ready for shipment.

But as time passed, the corporation's true motives became clear. They were more interested in profits than the well-being of the planets they supplied. They began cutting corners and taking shortcuts that put entire populations at risk.

One day, a shipment of hydrogen destined for a small farming colony never arrived. The colony was left without power, and their crops began to wither and die. When they contacted the Evil Mining Corporation, they were met with silence. It was only then that they realized just how much power the corporation had over their lives.

Desperate, the farmers banded together and set out to uncover the truth behind the corporation's operations. What they found was shocking. The corporation had been exploiting the resources of every planet they supplied, leaving behind nothing but ecological devastation and poverty.

Enraged, the farmers decided to take matters into their own hands. They gathered all the WD40 and duct tape they could find and launched a coordinated attack on the corporation's headquarters. The battle was long and hard-fought, but in the end, the farmers emerged victorious.

With the Evil Mining Corporation defeated, the planets were finally able to rebuild and thrive. They established new supply chains that were transparent and fair, ensuring that the four essential items were always available to those who needed them. And they vowed to never again let greed and corruption take hold of their society.

Lightweight, publish-subscribe network protocol that transports messages between devices

Open OASIS and ISO standard (ISO/IEC 20922:2016)

Mosquitto is a popular lightweight server (broker)
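Subscribers select messages with topic filters: `+` matches exactly one topic level, and `#` matches all remaining levels (it must be the last level). A stdlib sketch of this matching rule, simplified from the MQTT specification (it ignores, for example, the special handling of topics starting with `$`). paho-mqtt ships its own helper for this, `paho.mqtt.client.topic_matches_sub`, which you would use in practice.

```python
def topic_matches(topic_filter, topic):
    """Return True if `topic` matches the MQTT `topic_filter`.

    '+' matches exactly one level; '#' matches any number of remaining levels,
    including none, so 'csiot/#' also matches 'csiot'.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            return i == len(filter_levels) - 1  # '#' is only valid as the last level
        if i >= len(topic_levels):
            return False  # filter has more levels than the topic
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


if __name__ == "__main__":
    for t in ["csiot/lobby", "csiot/mos-eisley", "csiot/mos-eisley/oxygen"]:
        print(t, topic_matches("csiot/+", t), topic_matches("csiot/#", t))
```

A receiver subscribed to `csiot/+` therefore gets the messages of every station topic directly below `csiot/`, but not those of deeper sub-topics.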

Create a client that publishes data to a channel, and a client that receives data from that channel. Both communicate via MQTT.
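A minimal receiver sketch, assuming the paho-mqtt 2.x callback API (`CallbackAPIVersion.VERSION2`, as in the TLS example in this section) and stations that publish JSON to `csiot/<station>` topics. The broker host `broker.example.org` is a hypothetical placeholder, and the TLS setup is left out; it would be the same as for the sender. The message handling lives in a plain function, `handle_message`, so it can be tested without a broker.

```python
import json


def handle_message(topic, payload):
    """Decode a JSON payload and derive the station name from a 'csiot/<station>' topic."""
    station = topic.split("/", 1)[1] if "/" in topic else topic
    try:
        data = json.loads(payload.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return None  # ignore malformed messages instead of crashing the receiver
    return station, data


def on_connect(client, userdata, flags, reason_code, properties):
    # Subscribing here renews the subscription after every (re)connect
    client.subscribe("csiot/+")


def on_message(client, userdata, message):
    print(handle_message(message.topic, message.payload))


def main():
    import paho.mqtt.client as mqtt  # imported here so handle_message() works without paho

    client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
    # TLS setup (tls_set / tls_insecure_set) goes here, as for the sender
    client.on_connect = on_connect
    client.on_message = on_message
    client.connect("broker.example.org", 8883)  # hypothetical host; 8883 is the usual MQTT-over-TLS port
    client.loop_forever()  # blocks and dispatches incoming messages to on_message
```

Calling `main()` starts the receiver; it runs until the process is stopped.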

The MQTT server is secured with TLS. You'll find the certificates in the assignments repository. Here is an example of how to use them with paho and Python:

```python
import json
import ssl
from random import randint

import paho.mqtt.client as mqtt

# TLS: CA certificate to verify the broker, plus client certificate and key for client authentication
client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
client.tls_set(
    ca_certs="certificates/mosquitto.crt",
    certfile="certificates/auth.mosquitto.crt",
    keyfile="certificates/auth.mosquitto.key",
    cert_reqs=ssl.CERT_REQUIRED,
    tls_version=ssl.PROTOCOL_TLS,
    ciphers=None,
)
# Skip verification of the broker's hostname (the certificate is still checked against the CA)
client.tls_insecure_set(True)

# Random example value
value = randint(0, 1000)

# Serialize the data as JSON
data = {"key": value}
payload = json.dumps(data)
```

… csiot/lobby every 10 seconds

… csiot with csiot/+

… csiot/ containing your station name, e.g. csiot/mos-eisley for this data

List running containers

```shell
docker ps
```

Run a new container with alpine image

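```shell
# -ti: interactive session with a terminal
docker run -ti alpine sh
# Same, but pinned to a specific image tag
docker run -ti alpine:3.13 sh
```

Run a new container with nginx image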

```shell
# Publish container port 80 on host port 3333
docker run -p 3333:80 nginx
```

Map a folder as volume

```shell
# Quoting $PWD keeps paths containing spaces intact
docker run -p 3333:80 -v "$PWD/website:/usr/share/nginx/html" nginx
```

Run a new container with node app

```shell
docker run -p 3333:3000 -v "$PWD/app:/app" node:alpine node /app/server.js
```

Build custom image with node app

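Dockerfile

```dockerfile
FROM node:15-alpine
WORKDIR /app
COPY server.js .
# Exec form: node runs directly (not via /bin/sh -c) and receives signals such as SIGTERM
CMD ["node", "server.js"]
```

Build using Dockerfile and run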

```shell
docker build -t csiot-app app
docker run -p 3333:3000 csiot-app
```

Build a Docker Compose stack with 3 services

docker-compose.yml

```yaml
services:
  api:
    build: ./app
    ports:
      - "3333:3000"
  proxy:
    image: nginx:1-alpine
  redis:
    image: redis:6-alpine
```

Run full compose stack

```shell
docker compose up
```

Run single container from compose stack

```shell
docker compose run api sh
```

Use Docker Compose to start three station clients and one receiver. Copy your existing solution from Assignment 1 and extend it.
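With Docker Compose, each station container can receive its own settings through the `environment` section of its service. A sketch of how a station client might read them, assuming the hypothetical variable names `STATION_NAME` and `PUBLISH_INTERVAL`, with a default interval and a clear error if the station name is missing:

```python
import os


def load_config(env=os.environ):
    """Read station settings from environment variables (variable names are hypothetical)."""
    try:
        station = env["STATION_NAME"]  # required: fail early if the container lacks it
    except KeyError:
        raise SystemExit("STATION_NAME is not set") from None
    interval = int(env.get("PUBLISH_INTERVAL", "10"))  # seconds, defaults to 10
    return {"station": station, "topic": f"csiot/{station}", "interval": interval}


if __name__ == "__main__":
    print(load_config({"STATION_NAME": "mos-eisley"}))
```

The three station services in docker-compose.yml can then share one image and differ only in their `STATION_NAME` value.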

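```python
import os

# Read settings from environment variables (raises KeyError if a variable is missing)
my_string = os.environ["STRING_VARIABLE"]
my_integer = int(os.environ["INTEGER_VARIABLE"])
```

We use an emulator to simulate hardware devices.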

Check out https://gitlab.mi.hdm-stuttgart.de/csiot/supplementary/emulator.

The README explains how to use the emulator.

Use the emulator to simulate the state of supplies of a station. Modify the sender to fetch the emulator state and send it to the broker.
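One way to structure the modified sender is a loop that fetches the emulator state, wraps it in a message and publishes it. In this sketch, `run_sender` and the message layout (`station`, `supplies`) are hypothetical, and `fetch` and `publish` are passed in as functions, so the loop can be tested without a running emulator or broker; in the real sender they would wrap the REST call and `client.publish`. The state format is defined by the emulator (see its README), so the sketch simply forwards whatever `fetch` returns.

```python
import json
import time


def run_sender(station, fetch, publish, interval=10.0, iterations=None, sleep=time.sleep):
    """Periodically fetch the emulator state and publish it to csiot/<station>.

    fetch() returns the current state (or None on failure);
    publish(topic, payload) sends one message; iterations=None loops forever.
    """
    topic = f"csiot/{station}"
    count = 0
    while iterations is None or count < iterations:
        state = fetch()
        if state is not None:  # skip this round if the emulator was unreachable
            publish(topic, json.dumps({"station": station, "supplies": state}))
        count += 1
        sleep(interval)


if __name__ == "__main__":
    # Fake emulator and broker for a local dry run
    run_sender(
        "mos-eisley",
        fetch=lambda: {"oxygen": 80, "hydrogen": 55},
        publish=lambda topic, payload: print(topic, payload),
        iterations=2,
        sleep=lambda seconds: None,
    )
```

Injecting `fetch`, `publish` and `sleep` keeps the timing and error-handling logic testable, while the network code stays in small wrappers.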

A simple example of how to fetch data from a REST API:

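```python
import json
import urllib.error
import urllib.request


def get(url):
    """Fetch `url` and return the decoded JSON body, or None on failure."""
    try:
        req = urllib.request.Request(url)
        with urllib.request.urlopen(req) as f:
            if f.status == 200:
                return json.loads(f.read().decode('utf-8'))
    except urllib.error.HTTPError as e:
        # The server answered, but with an error status (4xx/5xx)
        print(f'HTTP error {e.code}')
    except urllib.error.URLError as e:
        # urlopen wraps connection errors (e.g. connection refused) in URLError
        print(f'could not connect: {e.reason}')
    except Exception as e:
        print(f'unknown error: {e}')
    return None


result = get('http://localhost:3333/path/to/route')
print(result)
```

Use your previous assignment as a basis.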

Build and maintain your own custom certificate authority (CA) for client authentication using Terraform.

Requirements:

openssl to inspect the generated certificates, e.g. openssl x509 -in a-cert.pem -noout -text