Introduction and course organization

Connect Internet of Things (IoT) enabled devices using scalable cloud services in a project setup.

Devices that communicate with cloud services

You should come up with your own project idea, but here is some inspiration:

You don't have to solve complex problems; you can also create something funny.

Note: The project idea itself does not matter that much; the goal is to learn workflow and technology.

You should have heard of

HTTP, TCP/IP, UDP, SSH, WebSockets, JSON, Git, Docker, API, Continuous Everything, Pull/Merge Request

| Description | Link |
|---|---|
| GitLab repositories: team projects, source code, issues, ... | https://gitlab.mi.hdm-stuttgart.de/csiot/ss25 |
| Supplementary code repositories: code examples, emulator, ... | https://gitlab.mi.hdm-stuttgart.de/csiot/supplementary |

The course takes place in presence.

If you want to participate remotely (e.g. due to a COVID infection, quarantine or other legitimate reasons), send us an email in good time. We will bring the Meeting Owl and start the BBB stream. Note that this is not a high-quality hybrid setup, just sound and a shared screen.

| Date | Session (14:15 - 17:30) | Description |
|---|---|---|
| 18.03.2025 | Kickoff | Course overview, questions and answers |
| 25.03.2025 | Lecture + Idea Pitch | Pitch your project idea (if you have one). Do you already have a team? |
| 01.04.2025 | Lecture + Team Setup | Maximum of 6 teams (4-6 members each) and 30 students in total. Higher semesters take precedence over lower semesters. |
| 08.04.2025 | Lecture | |
| 15.04.2025 | Lecture + Team Meetings | |
| 22.04.2025 | Lecture / Working Session | |
| 29.04.2025 | Working Session + Q&A | Questions regarding presentations at the beginning of the session |
| 06.05.2025 | Midterm presentations | |
| 13.05.2025 | Working Session + Team Meetings | |
| 20.05.2025 | Working Session | |
| 27.05.2025 | Working Session + Team Meetings | |
| 03.06.2025 | Working Session | |
| 17.06.2025 | Working Session + Team Meetings | |
| 24.06.2025 | Working Session + Q&A | Questions regarding presentations at the beginning of the session |
| 01.07.2025 | Final presentations | |
| 06.07.2025 (Sunday) | Project submission | Commits after the submission date will not be taken into account. Make sure to check the submission guidelines. |

| Group | Time |
|---|---|
| LiveSense | 14:30 - 14:45 |
| Bierferando | 14:50 - 15:05 |
| SmartRack | 15:10 - 15:25 |
| AeroTrack | 15:30 - 15:45 |

Grading is based on the GitLab repository in the csiot group: https://gitlab.mi.hdm-stuttgart.de/groups/csiot

Add a README.md to the repository that contains:

The total of 50 points is split into 4 categories.

Following general best practices is required in each category.

Although grades are derived from the team's work, each individual receives a distinct grade that can differ from those of the other team members.

Code & Architecture (20 Points)

Tooling (15 Points)

Presentation (10 Points)

Technical documentation (5 Points)

The Center for Learning and Development, the Central Study Guidance and the VS (student government) support you:

Introduction

What do you think?

Cloud computing is the on-demand availability of computer system resources, without direct active management by the user.

| Service | Description | Examples |
|---|---|---|
| Infrastructure as a service (IaaS) | High-level API for physical computing resources | Virtual machines, block storage, object storage, load balancers, networks, ... |
| Platform as a service (PaaS) | High-level application hosting with configuration | Databases, web servers, execution runtimes, development tools, ... |
| Software as a service (SaaS) | Hosted applications without configuration options | Email, file storage, games, project management, ... |
| Function as a service (FaaS) | High-level function hosting and execution | Image resizing, event-based programming, ... |

What is: Amazon Elastic Compute Cloud (EC2)? Adobe Creative Cloud? DynamoDB? OpenFaaS? Vercel Functions? Google Firebase? iCloud? GitHub? Vercel? Microsoft 365? Zoom? Azure Virtual Machines? Amazon S3? Gmail? Hosted Kubernetes? Knative? AWS Lambda? Shopify? Google Compute Engine (GCE)? Dropbox?

You have to know your application, requirements and use-cases!

Introduction

What do you think?

Software whose architecture leverages a multitude of cloud services.

An example: an app and web platform that allows friends from all over the world to collaboratively create a movie from their holidays.

| Feature | Technical elements |
|---|---|
| Users can sign up to the platform using an email address or a third-party provider | Email, OAuth2 provider, relational data storage, ... |
| Users can create holiday groups and invite friends | Relational data storage, caching, notification, ... |
| Friends can upload raw footage into holiday groups and tag it | Relational data storage, object storage, transcoding, queueing, search index, caching, notification, ... |
| Friends can edit the footage into a movie using an online editor | Object storage, transcoding, queueing, caching, notification, ... |
| For development and operations | System monitoring and alerting, distributed logging, automated integration and deployment, global content distribution network, virtual network, system environments (development, staging, production, ...) |

Feature: Friends can upload raw footage into holiday groups and tag it

Introduction

| Conventional Infrastructure | Cloud Infrastructure |
|---|---|
| (Bare-metal) servers, type 1/2 hypervisors, containers | Cloud resources |
| Long-lived assets | Short resource life span |
| Own data center, colocation, rented dedicated servers | No own hardware |
| Direct physical access | No access to hardware |

Deployment, configuration, maintenance and teardown have to be automated

Developers need to understand the runtime environment

Operators need to understand some application layers

Service discovery, service configuration, authentication/authorization and monitoring

Integration, deployment, delivery

Scalability, reliability, geo replication, disaster recovery

Introduction

A coded representation for infrastructure allocation and configuration

| Name | Is IaC | Automation | Declarative | Vendor Agnostic |
|---|---|---|---|---|
| Web Interface | ❌ | ❌ | ❌ | ❌ |
| CLI | ❌ | ✅ | ❌ | ❌ |
| SDK | ✅ | ✅ | ⚠️ | ❌ |
| IaC Tools | ✅ | ✅ | ✅ | ✅ |
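Declarative tools such as Terraform compare the desired state declared in code with the current state and compute the actions needed to reconcile the two (terraform plan), then execute them (terraform apply). The following sketch models only that reconciliation idea in plain Python. The resource addresses, the "versioning" attribute and the dictionary-based state are hypothetical; real tools additionally handle dependencies, forced replacement, providers and remote state.

```python
from typing import Dict, List, Tuple

# Hypothetical, simplified model: a resource address maps to a flat dict of attributes.
State = Dict[str, Dict[str, str]]
Action = Tuple[str, str]  # (action, resource address)


def plan(desired: State, actual: State) -> List[Action]:
    """Derive the actions needed to turn `actual` into `desired`."""
    actions: List[Action] = []
    for address in sorted(desired):
        if address not in actual:
            actions.append(("create", address))
        elif desired[address] != actual[address]:
            actions.append(("update", address))
    for address in sorted(actual):
        if address not in desired:
            # Resources that are no longer declared get torn down.
            actions.append(("delete", address))
    return actions


def apply(desired: State, actual: State) -> State:
    """Execute the plan against an in-memory 'cloud' and return the resulting state."""
    new_state = {address: dict(attrs) for address, attrs in actual.items()}
    for action, address in plan(desired, actual):
        if action == "delete":
            del new_state[address]
        else:
            new_state[address] = dict(desired[address])
    return new_state


if __name__ == "__main__":
    desired = {
        "aws_s3_bucket.images": {"versioning": "enabled"},
        "aws_s3_bucket.logs": {"versioning": "enabled"},
    }
    actual = {
        "aws_s3_bucket.logs": {"versioning": "disabled"},
        "aws_s3_bucket.old": {"versioning": "enabled"},
    }
    print(plan(desired, actual))
    # Re-planning after apply yields no actions: the operation is idempotent.
    print(plan(desired, apply(desired, actual)))
```

The idempotency shown in the last line is the property that makes declarative IaC safe to run repeatedly in a pipeline.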

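Example: Creating an S3 bucket on AWS using Terraform

```hcl
# Credentials are read from the environment (AWS_ACCESS_KEY_ID and
# AWS_SECRET_ACCESS_KEY) or an AWS CLI profile; never commit keys to Git.
provider "aws" {
  region = "eu-central-1"
}

# New S3 buckets are private by default. The `acl` argument of
# aws_s3_bucket is deprecated since AWS provider v4 (use aws_s3_bucket_acl).
resource "aws_s3_bucket" "terraform-example" {
  bucket = "aws-s3-terraform-example"
}
```

Example: Creating a Google Cloud Storage bucket using Pulumi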

```python
import pulumi
from pulumi_google_native.storage import v1 as storage

config = pulumi.Config()
# GCP project ID, set via `pulumi config set project <id>`
project = config.require('project')

# Create a Google Cloud resource (Storage Bucket)
bucket_name = "pulumi-goog-native-bucket-py-01"
bucket = storage.Bucket('my-bucket', name=bucket_name, bucket=bucket_name, project=project)

# Export the bucket self-link
pulumi.export('bucket', bucket.self_link)
```

| Continuous | Requires | Offers | Implementation |
|---|---|---|---|
| Integration | Developers with the right mindset; established workflows | Avoids divergence; ensures integrity/runnability | Shared codebase; integration testing |
| Deployment | Automated deployment; access control / permission management | Ensures deployability | Infrastructure as Code; Docker Swarm, Kubernetes, Nomad, ... |
| Delivery | Deployability; approval from marketing, sales, customer care | Rapid feature release cycles; small to no difference between environments | Same as for continuous deployment; release/feature management |

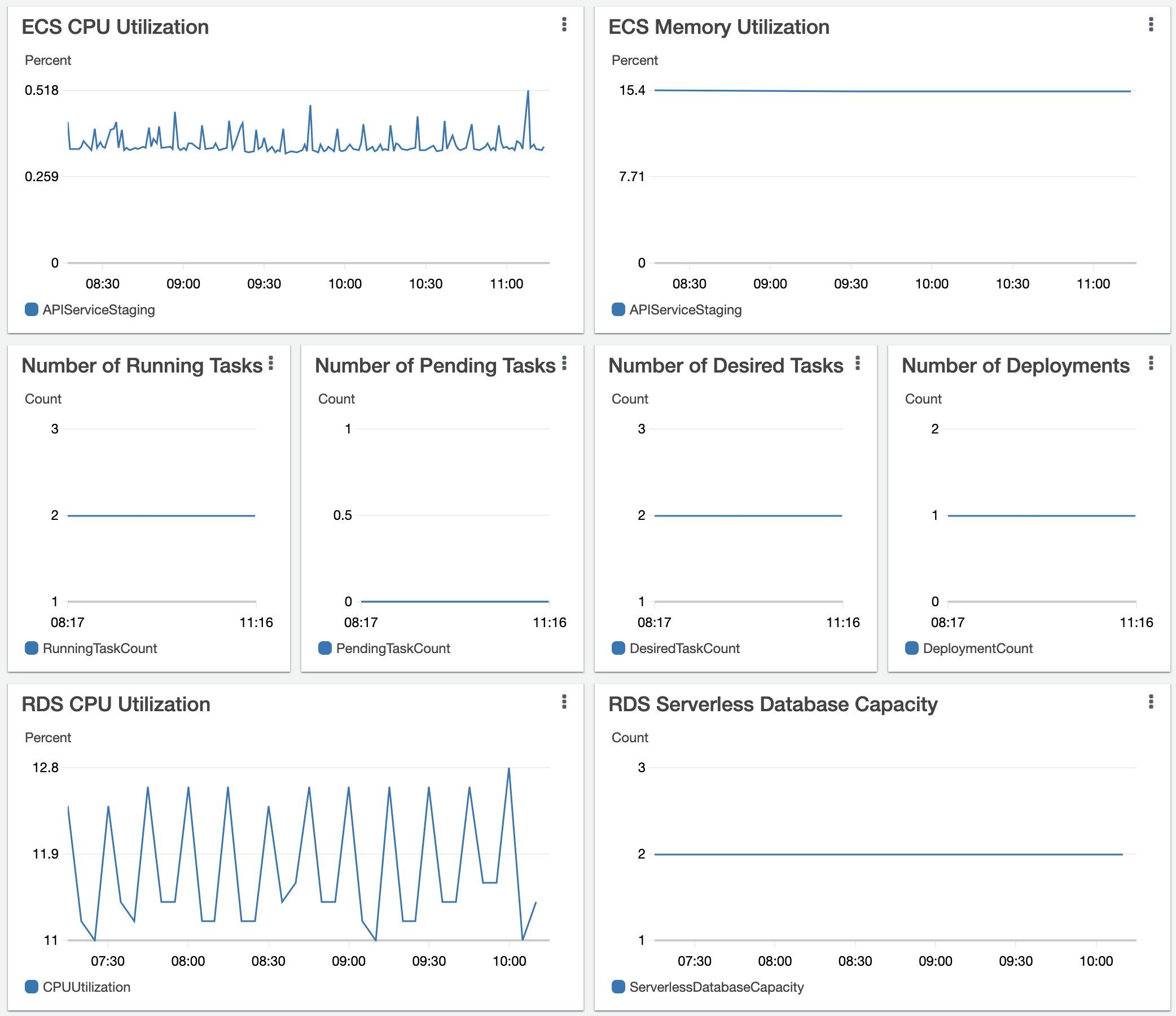

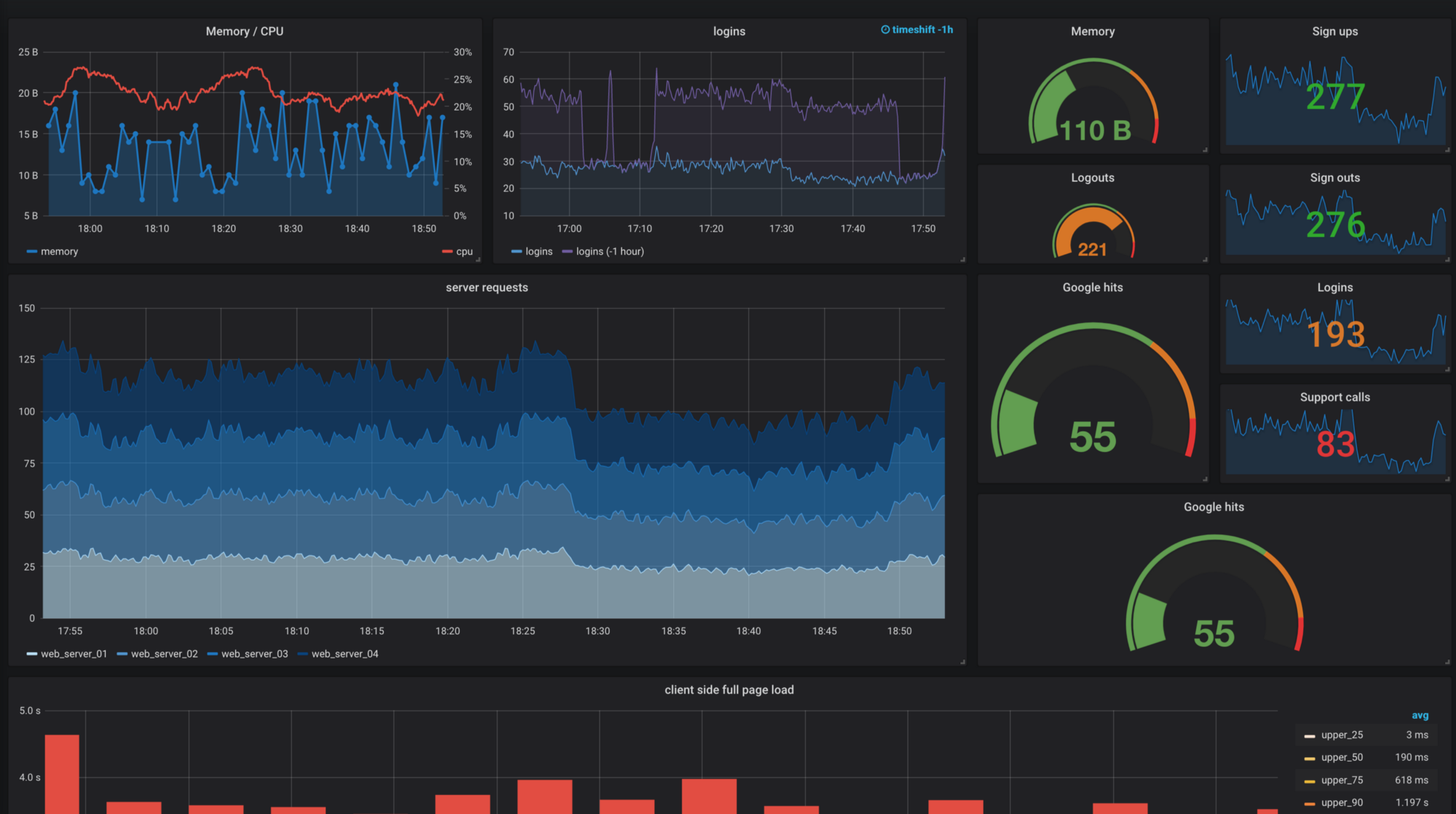

Proper monitoring and alerting are essential when CD is applied

Service for time series data, logs and dashboards

Prometheus: Time series database, metric exporters, Alertmanager

Grafana: (Real-time) Dashboards for monitoring data, Alerting Engine
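A metric exporter is simply an HTTP endpoint that Prometheus scrapes periodically, by default on the path /metrics. The stdlib-only sketch below renders a gauge in the Prometheus text exposition format to show what such an endpoint returns; in practice you would usually use the official prometheus_client library instead. The metric name, the device label, the readings and the port are hypothetical.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict

# Hypothetical readings: latest temperature per device in degrees Celsius.
READINGS: Dict[str, float] = {"livingroom": 21.5, "garage": 12.0}


def escape_label_value(value: str) -> str:
    """Escape backslash, double quote and newline, as required for label values."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_metrics(readings: Dict[str, float]) -> str:
    """Render readings in the Prometheus text exposition format."""
    lines = [
        "# HELP device_temperature_celsius Latest temperature reported by a device.",
        "# TYPE device_temperature_celsius gauge",
    ]
    for device, value in sorted(readings.items()):
        label = escape_label_value(device)
        lines.append(f'device_temperature_celsius{{device="{label}"}} {value}')
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves the current readings on /metrics, the default Prometheus scrape path."""

    def do_GET(self) -> None:
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(READINGS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Serve metrics until interrupted; port 8000 is an arbitrary choice."""
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

After calling serve(), the exporter's host:port would be added as a scrape target in the Prometheus configuration; Grafana can then chart the stored time series and alert on it.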

Introduction

What do you think?

A system of interrelated computing devices that can transfer data over a network without human interaction

| Type | CPU (Max) | RAM | OS | TCP/IP | GPIO | ||

|---|---|---|---|---|---|---|---|

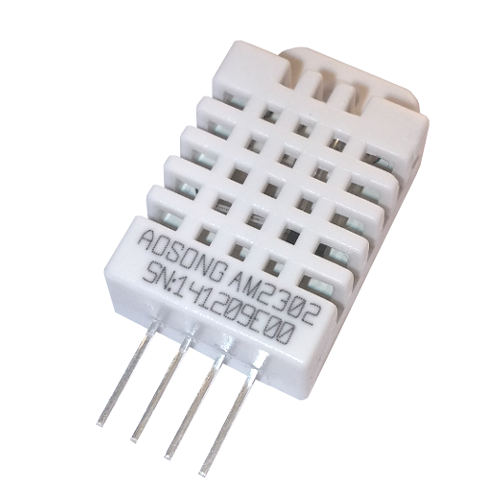

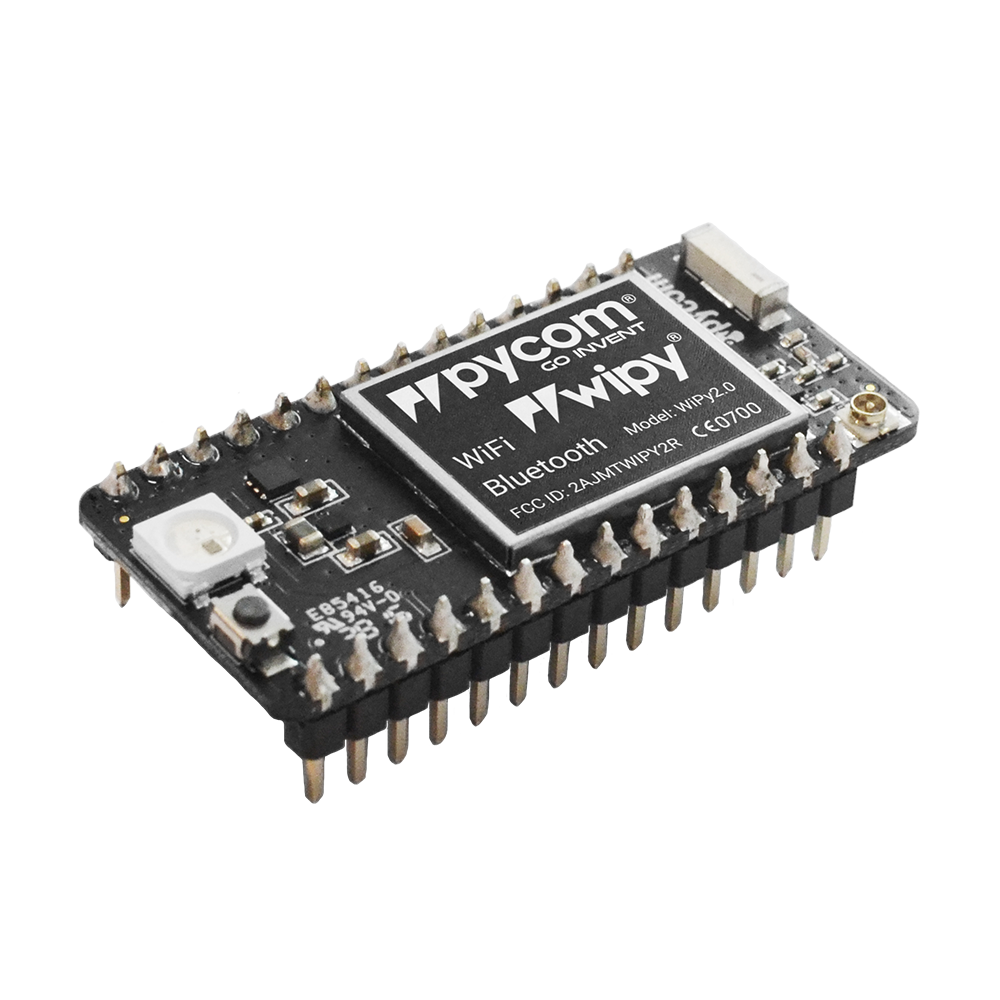

| DHT22 |  |

Sensor | - | - | - | ❌ | ❌ |

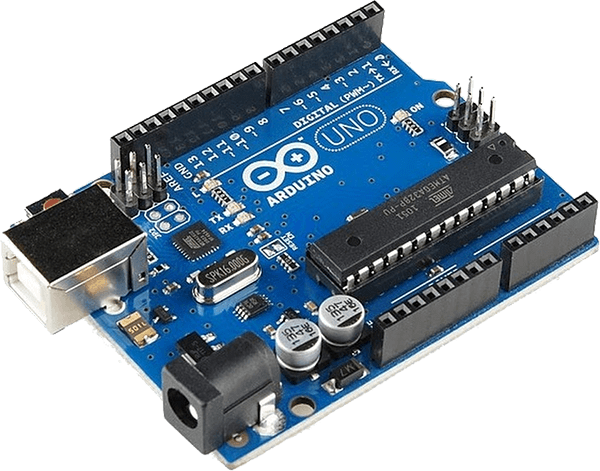

| Arduino (ATmega328P) |  |

MCU | 20 MHz 8-bit RISC | 2 KiB SRAM | - | ❌ | ✅ |

| ESP32 (Xtensa LX6) |  |

SoC | 2 * 240 MHz 32-bit RISC | 520 KiB SRAM | e.g. FreeRTOS | ✅ | ✅ |

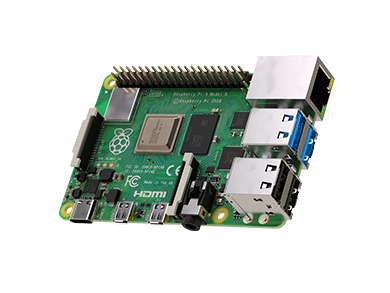

| Raspberry Pi 4 (ARM Cortex-A72) |  |

SoC | 4 * 1.5 GHz 64-bit ARM | 4 GiB DDR4 | GNU/Linux | ✅ | ✅ |

| Random Gaming-PC |  |

PC | 8 (HT) * 5.0 GHz 64-bit x86 | 32 GiB DDR4 | e.g. GNU/Linux | ✅ | ❌ |

The following protocols are often used in an Internet of Things stack:

| Name | OSI Layer | Description |
|---|---|---|
| LoRa(WAN) | Layer 1/2 | Low power, long range, uses license-free radio frequencies |
| ZigBee | Layer 1/2 | Low power, 2.4 GHz, 64-bit device identifier |
| 6LoWPAN | Layer 1/2 | Low power, 2.4 GHz / license-free radio frequencies, IPv6 addressing |
| Ethernet | Layer 1/2 | Frame-based protocol, also used for the regular internet |
| 802.11 Wi-Fi | Layer 1/2 | Wireless local area network protocol, also used for the regular internet |
| IPv4 and IPv6 | Layer 3 | Packet-based protocol, also used for the regular internet |
| Bluetooth LE | Layer 3 | Low energy wireless personal area network protocol, different from Bluetooth Classic |
| MQTT | Layer 7 | Lightweight publish-subscribe protocol (Message Queuing Telemetry Transport) |

Lightweight, publish-subscribe network protocol that transports messages between devices

MQTT is an OASIS standard (current version 5.0); version 3.1.1 is also published as the international standard ISO/IEC 20922.

Eclipse Mosquitto is a popular lightweight server (broker).
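To see why MQTT counts as lightweight, it helps to look at the bytes on the wire. The sketch below encodes a QoS 0 PUBLISH packet as defined in MQTT 3.1.1: a one-byte packet type, the variable-length "Remaining Length", the length-prefixed topic name and the raw payload. It deliberately ignores MQTT 5 (which adds a properties field), QoS 1/2 packet identifiers and the DUP flag; in practice a client library such as Eclipse Paho builds these packets for you. The topic and payload are illustrative only.

```python
import struct


def encode_remaining_length(length: int) -> bytes:
    """Encode 'Remaining Length' as an MQTT variable byte integer (7 bits per byte)."""
    if not 0 <= length <= 268_435_455:
        raise ValueError("remaining length out of range")
    encoded = bytearray()
    while True:
        byte = length % 128
        length //= 128
        if length > 0:
            byte |= 0x80  # continuation bit: more length bytes follow
        encoded.append(byte)
        if length == 0:
            return bytes(encoded)


def encode_publish_qos0(topic: str, payload: bytes, retain: bool = False) -> bytes:
    """Build an MQTT 3.1.1 PUBLISH packet with QoS 0 (no packet identifier)."""
    topic_bytes = topic.encode("utf-8")
    # Variable header: topic name as UTF-8 string with a 2-byte big-endian length prefix.
    variable_header = struct.pack("!H", len(topic_bytes)) + topic_bytes
    # Fixed header: packet type 3 (PUBLISH) in the upper nibble, RETAIN in bit 0.
    first_byte = 0x30 | (0x01 if retain else 0x00)
    body = variable_header + payload
    return bytes([first_byte]) + encode_remaining_length(len(body)) + body


if __name__ == "__main__":
    packet = encode_publish_qos0("a/b", b"21.5")
    print(len(packet), packet.hex(" "))
```

For a short topic and a small payload the protocol overhead is just the 2-byte fixed header plus the 2-byte topic length, which matters on constrained devices and metered links.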

What is...

X.509

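```shell
# Print the contents of a PEM-encoded certificate
openssl x509 -in cert.pem -noout -text
# Open a TLS connection and show the server's certificate and chain
openssl s_client -connect emqx.services.mi.hdm-stuttgart.de:8883
```

Important: Server and client certificates do NOT have to be signed by the same CA!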
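In a mutual TLS setup the two certificate roles are configured independently, which is why they can come from different CAs: the CA file only verifies the server, while the client certificate and key are what the client presents to the broker. AWS IoT Core, for example, uses an Amazon root CA for its server certificate, while client certificates can be issued by your own CA. A minimal sketch with Python's ssl module; the file paths are placeholders you would supply, and fetch_server_certificate is a hypothetical helper that retrieves the verified server certificate, similar to inspecting it with openssl s_client.

```python
import socket
import ssl
from typing import Optional


def make_mqtt_tls_context(
    ca_file: Optional[str] = None,
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
) -> ssl.SSLContext:
    """TLS context for an MQTT client with certificate-based client authentication.

    ca_file: CA bundle used to verify the *server* (system trust store if None).
    cert_file/key_file: *client* certificate and key presented to the broker;
    they may be issued by a completely different CA than the server certificate.
    """
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    if cert_file is not None:
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


def fetch_server_certificate(host: str, port: int, context: ssl.SSLContext) -> dict:
    """Connect via TLS and return the server certificate after verification."""
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

For example, fetch_server_certificate("emqx.services.mi.hdm-stuttgart.de", 8883, make_mqtt_tls_context()) should work if the broker's certificate chains to a CA in your system trust store; otherwise pass the broker's CA file as ca_file.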

You don't have to use IaC to manage your account and users

Example policy for IAM users:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "iam:GetAccountPasswordPolicy",
        "iam:ListUsers",
        "iam:GetAccountSummary"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "iam:GetUser",
        "iam:GetLoginProfile",
        "iam:UpdateLoginProfile",
        "iam:ChangePassword"
      ],
      "Resource": "arn:aws:iam::*:user/${aws:username}"
    },
    {
      "Effect": "Allow",
      "Action": [
        "iam:DeleteAccessKey",
        "iam:GetAccessKeyLastUsed",
        "iam:UpdateAccessKey",
        "iam:CreateAccessKey",
        "iam:ListAccessKeys"
      ],
      "Resource": "arn:aws:iam::*:user/${aws:username}"
    }
  ]
}
```

Live demos and practical exercises to learn some new technologies

Before you start with the assignments, create a personal project in GitLab and invite us. Push your progress regularly during the assignments and follow best practices (e.g. add *.pem to .gitignore).

You should do all assignments yourself; of course, you can help each other.

OneMillionPixels is a public canvas where everyone can draw using MQTT messages.

https://pixels.services.mi.hdm-stuttgart.de
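A pixel is drawn by publishing a JSON object with the integer fields x, y, r, g and b, as described by the message schema of the canvas. A minimal sketch of building such a payload in Python; make_pixel_message is a hypothetical helper, and it only enforces what the schema states (all fields are integers), not any coordinate or colour ranges.

```python
import json
from typing import Dict


def make_pixel_message(x: int, y: int, r: int, g: int, b: int) -> str:
    """Serialize one pixel as a JSON object with the fields of the canvas schema."""
    message: Dict[str, int] = {"x": x, "y": y, "r": r, "g": g, "b": b}
    for name, value in message.items():
        # The schema requires integers. bool is a subclass of int in Python,
        # so it is rejected explicitly; floats and strings fail isinstance.
        if isinstance(value, bool) or not isinstance(value, int):
            raise TypeError(f"{name} must be an integer, got {type(value).__name__}")
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical example: a red pixel at (10, 20).
    print(make_pixel_message(10, 20, 255, 0, 0))
```

The resulting string can be passed as the payload to the publish call of an MQTT client library, e.g. Client.publish(topic, payload) in Eclipse Paho, using the broker and topic given for the canvas.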

Broker

```json
{
  "type": "object",
  "properties": {
    "x": { "type": "integer" },
    "y": { "type": "integer" },
    "r": { "type": "integer" },
    "g": { "type": "integer" },
    "b": { "type": "integer" }
  }
}
```

Get familiar with the AWS IoT Core service

Getting started: https://docs.aws.amazon.com/iot/latest/developerguide/iot-quick-start.html

Build your own solution (we want to publish and subscribe to AWS IoT Core with a custom script):

Publish to: /csiot/{device_name}/temperature

Subscribe to: /csiot/+/temperature

You can publish further data, e.g. humidity, and choose a more generic subscription to read all values (check out the MQTT subscription syntax).
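The MQTT subscription syntax has two wildcards: + matches exactly one topic level, and # matches the current level and everything below it, but only as the last level of a filter (so a filter such as /csiot/#/temperature is invalid). The brokers, including AWS IoT Core, do this matching for you; the sketch below re-implements the rules from the MQTT specification purely to make them testable locally. The function names are hypothetical.

```python
def is_valid_filter(topic_filter: str) -> bool:
    """Check wildcard placement rules for an MQTT topic filter."""
    if not topic_filter:
        return False
    levels = topic_filter.split("/")
    for index, level in enumerate(levels):
        if "#" in level and (level != "#" or index != len(levels) - 1):
            return False  # '#' must occupy a whole level and be the last one
        if "+" in level and level != "+":
            return False  # '+' must occupy a whole level
    return True


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if a concrete topic name matches an MQTT topic filter."""
    if not is_valid_filter(topic_filter):
        raise ValueError(f"invalid topic filter: {topic_filter!r}")
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    # Wildcards in the first level never match topics starting with '$' (e.g. $SYS).
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False
    for index, level in enumerate(filter_levels):
        if level == "#":
            return True  # matches this level and all below, including the parent
        if index >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[index]:
            return False
    return len(filter_levels) == len(topic_levels)


if __name__ == "__main__":
    for f in ("/csiot/+/temperature", "/csiot/#"):
        print(f, topic_matches(f, "/csiot/sensor-1/humidity"))
```

Note that a leading slash creates an empty first level, so /csiot/... and csiot/... are different topics.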

Build and maintain your own custom Certificate authority for client-authentication using Terraform.

Requirements:

Use the tls Terraform provider and store the results with the local provider.

Use openssl to inspect the generated certificates, e.g. openssl x509 -in a-cert.pem -noout -text

We are going to build a deployment for distributed counters and displays. There are multiple counter devices and one or more display devices. The counter devices increment the count state by publishing to an AWS IoT Core topic. The display devices subscribe to that topic to display the current count.
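The message flow of the counter/display deployment can be prototyped without any cloud resources. The sketch below is one possible interpretation, assuming counters publish increment events to a single topic; InMemoryBroker is a hypothetical stand-in for AWS IoT Core that only supports exact topic matches, and the topic name and JSON fields are made up for illustration.

```python
import json
from collections import defaultdict
from typing import Callable, DefaultDict, List

# Hypothetical topic name chosen for this sketch.
COUNT_TOPIC = "csiot/counter/increments"

Callback = Callable[[str, bytes], None]


class InMemoryBroker:
    """Stand-in for AWS IoT Core: forwards each message to all subscribers of that exact topic."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, payload: bytes) -> None:
        for callback in self._subscribers[topic]:
            callback(topic, payload)


class CounterDevice:
    """Publishes one increment event per button press."""

    def __init__(self, device_id: str, broker: InMemoryBroker) -> None:
        self.device_id = device_id
        self.broker = broker

    def press(self) -> None:
        message = {"device": self.device_id, "increment": 1}
        self.broker.publish(COUNT_TOPIC, json.dumps(message).encode("utf-8"))


class DisplayDevice:
    """Subscribes to the increment topic and keeps a running total."""

    def __init__(self, name: str, broker: InMemoryBroker) -> None:
        self.name = name
        self.count = 0
        broker.subscribe(COUNT_TOPIC, self.on_message)

    def on_message(self, topic: str, payload: bytes) -> None:
        self.count += json.loads(payload)["increment"]
        print(f"[{self.name}] count = {self.count}")


if __name__ == "__main__":
    broker = InMemoryBroker()
    display = DisplayDevice("display-1", broker)
    counter_a = CounterDevice("counter-a", broker)
    counter_b = CounterDevice("counter-b", broker)
    counter_a.press()
    counter_b.press()
    counter_a.press()
```

This design has two weaknesses worth discussing: a display that subscribes late starts at 0, and with QoS 1 a duplicated message is counted twice. Keeping the count state in a retained message or an AWS IoT device shadow avoids both.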

Required resources:

Hints:

```hcl
// AWS Root CA
data "http" "ca_pem" {
  url = "https://www.amazontrust.com/repository/AmazonRootCA1.pem"
}

// IoT Core MQTT endpoint for your account
data "aws_iot_endpoint" "current" {
  endpoint_type = "iot:Data-ATS"
}
```

Build gateway software that:

Create a Terraform module that:

Connect the gateway and the IoT Core thing to: